questionet

Lec 02 - Image classification 본문

Summary

image classification is a fundamental building block of different algorithms

like object detection (draw boxes around the objects), image captioning

the example of the process of image classification

1 input : image (like a cat)

2 algorithm : perform image classification

(giving category label to objects and images)

3 output : image is categorized (cat labeled)

what exactly computer do?

at each pixel computer calls value which is represented with three numbers between 0 and 255

(i.e. just a big grid of numbers between [0, 255] represent the image.

e.g. 800 x 600 x 3 (3 channels RGB)

problems

(related to common-sense reasoning human do, unassuming problems)

1. there is a semantic gap

difference between what we understand when we look at the cat image

and what is represented in the grid of pixel values for a computer.

for example, all pixels change when the camera moves.

then how does the number have a constant meaning?

how could computer understand that

changed numbers of the grid are still a cat?

so we have to design algoritgms that are robust to the massive changes

in the raw pixel values

2. intraclass classification

even though there are various cats

(about sizes, colors, appearance featuers...),

computer could tell us "a cat is a cat" raw pixel values

3. fine-grained categories

computer also could answer a question :

" what kind of a cat? (different breeds)

4. background clutter(mess),

illumination changes,

deformation(different poses)

also we need classifiers to be robust to these factors

5. occlusion

computer should classify an image which might not be visible hardly

e.g. small part of a whole body, hidden by obstacles...

how can we handle these problms?

edges in images are really important so we might probably do first

take the image

then convert it

then extract edges (using some kind of edge detection algorithm)

then find corners or other type of interpretable patterns in those edges

(in case of a cat, from triangular point of ear, draw out rules about what angles cat's ears are allowed to be...)

then hard coding(explicitly programming :

tell computer exactly what steps it needs to perfrom in order to produce the output that you want it to produce

but it's bad approach

because we can't all features of the things under various conditions

Data-Driven approach

machine learning takes Data-Driven approach

: algorithms that can learn from data

1. collect a dataset of images and labels

2. use Machine Learning to train a classifier

(learn statistical dependencies between the input images and

the dataset and the output labels)

3. evaluate the classifier on new images

two parts of data-driven algorithm

instead of writing single monolithic function called 'classify image'

we have two piece API

1 one function called 'train'

(using data set and labels, making some statistical model)

2 another one predict function

(put a new image, spit out the labels as they have been learned from the training set)

in Data-Driven approach,

what we're basically doing is programming the computer via the data that we feed it in

all we need to do is collect a new data set and feed it

Nearest Neighbor

NN is a first classification algorithm, first classifier

but it might not deserve the name of learning algorithm.

because for NN the train function is trivial, it just memorizes all the training data.

there is no process called 'train'

components of NN

NN train function : Memorize all data and labels (just memorize training data)

NN predict function : Predict the label of the most similar training image

process of NN

1 take our new image that we want to predict a label for

2 compare it to each one of our images in the training set

(using some kind of comparison or similarity function)

3 return the label of the most similar training image

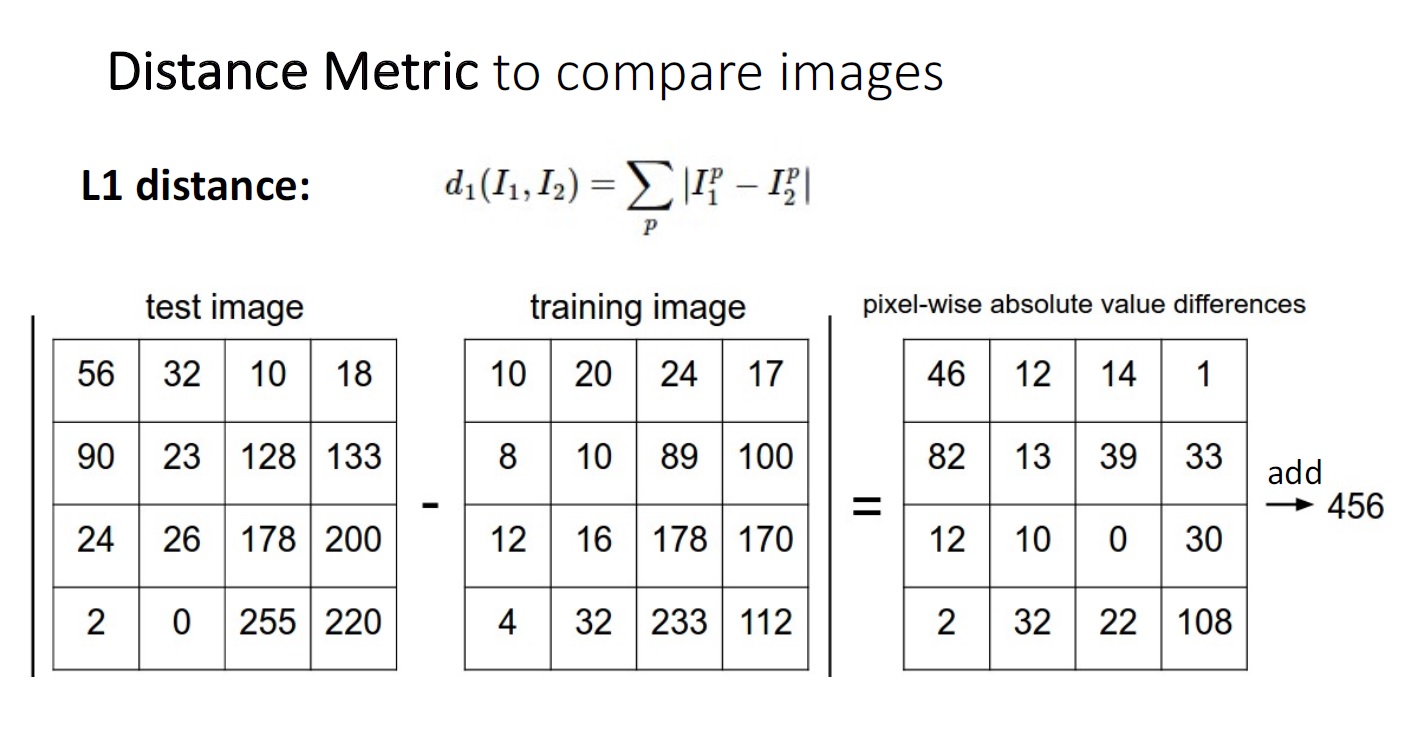

distance metric

NN needs a function that can compute the similarity

between two input images

one of basic similarity functon is L1 distance (Manhattan distance)

: sum of absolute value difference

'distance is going to zero' means two images are exactly going to the same

(only computer's point of view, remember 'problems' above)

even though images can look very visually similar as measured by L1 depth,

they actually can sometimes have very different semantic meaning

opposite characteristics of machine learning system

NN needs a kind of constant time training,

which means there is no need training time.

i.e. the speed of learning or training is meaningless.

of course if you were to make a deep copy that maybe linear time

but, in case of 'testing',

NN needs linear time. testing time depends on the size of examples.

because NN needs to compare every testing example to each of the training examples.

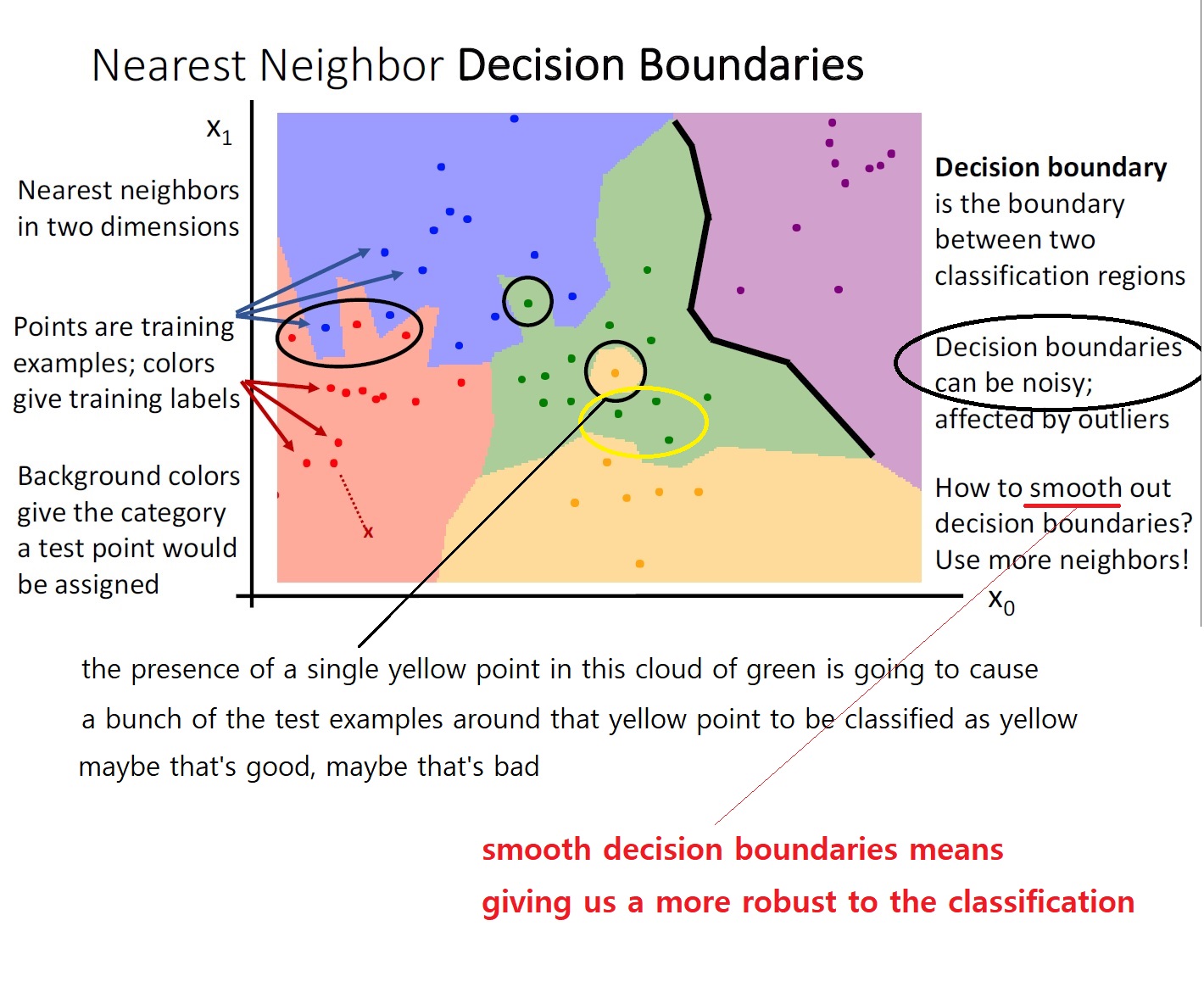

decision boundaries

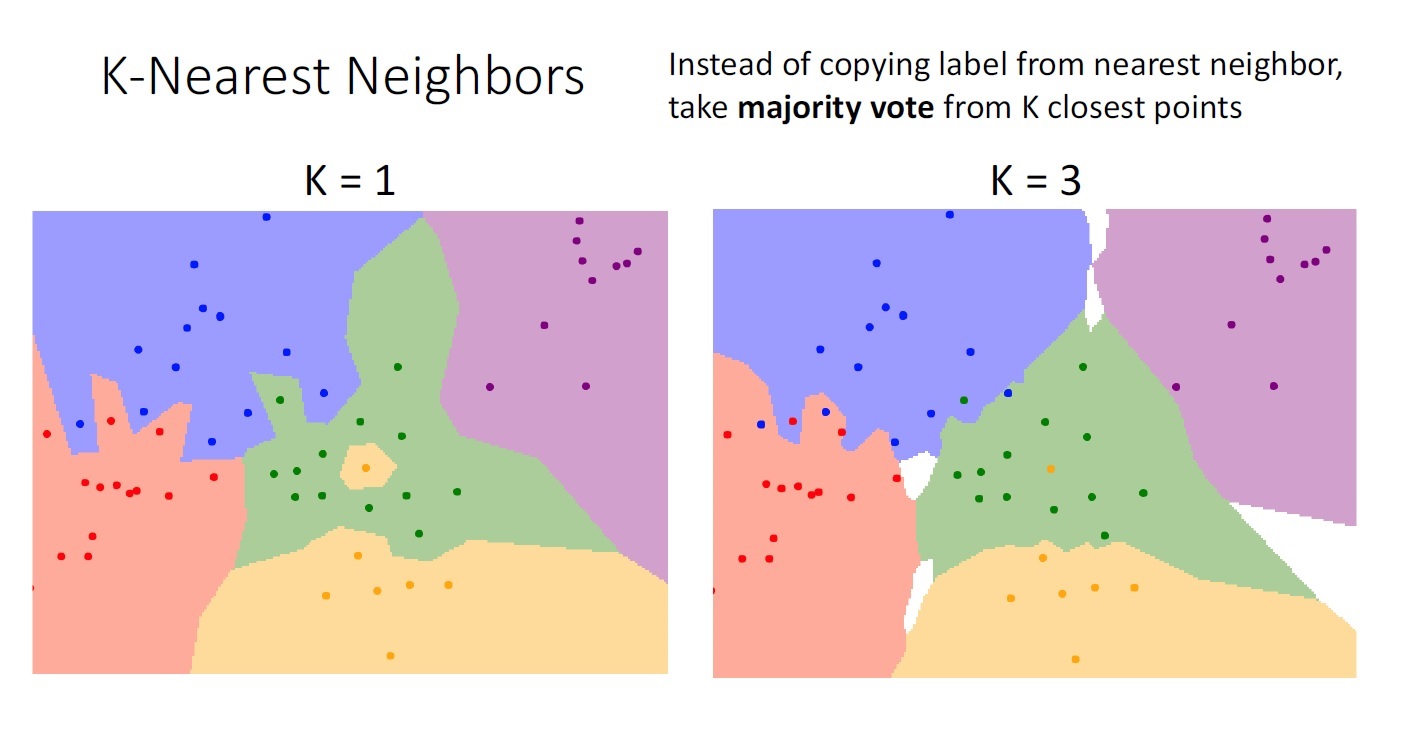

KNN

idea of KNN

he closest colored dots(labeled examples) nearby decision boundaries decide

the region of color(category which test examples would be assigned) near decision boundaries by voting (the number of the voters = K )

the effect of KNN

1 more smooth decision boundaries = more robust classifier

2 reduce the effect of outliers

hyper parameter

we need to choose a value of K

(how many deffierent neighbors are going to consider)

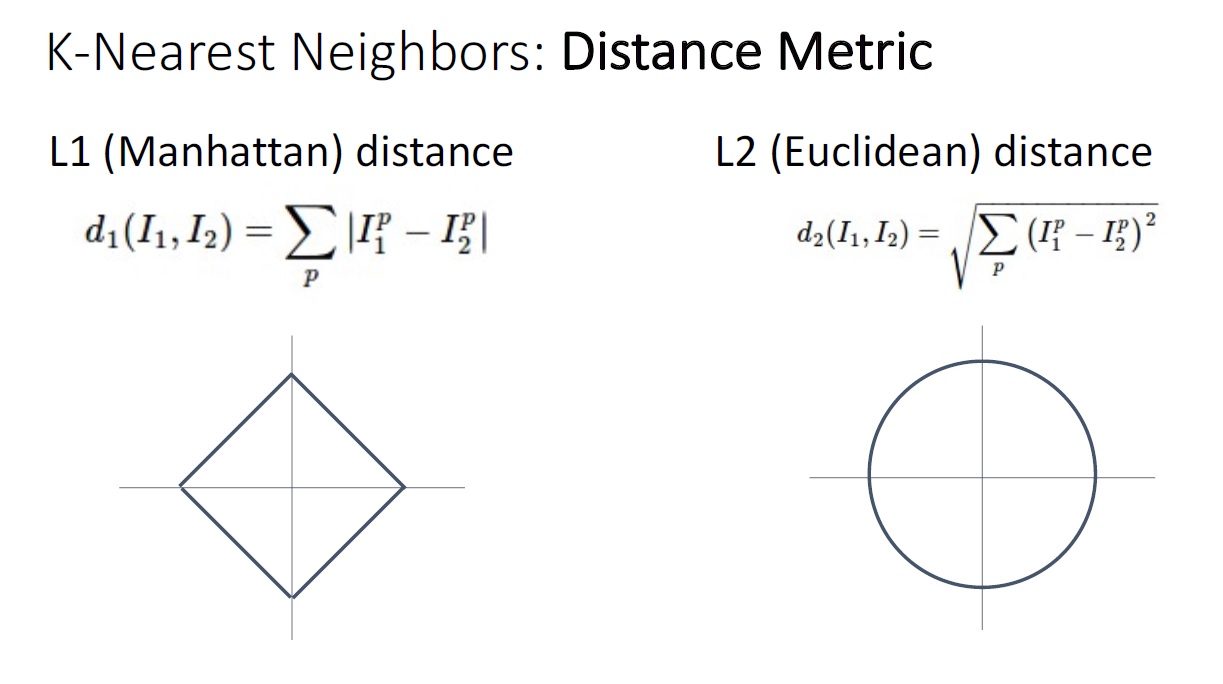

we also need to choose a distance metric

choice of K and the distance metric are examples of what we call

'hyper parameters'

difficulty of choosing hyper parameters

1 learning algorithms can't learn hyper parameters directly from the training data

2 we can't decide which hyper paremeters are best on our data before try different values and see how is going on

even though hyper parameters are very problem dependent

there are some hints.

1. do not choose hyperparameters that work best on data

some hyper parameter will cause our learning algorithm to give us the highest accuracy on our training set

considering accuracy only seems reasonable but actually terrible idea.

never do this

e.g. K=1 always works, perfectly on training data

but, k =1 it will try to find the nearest training point which is itself

and then it will always return the correct label

2. do not just simply split data into train set and test set

do not use the test set for choosing hyperparameters

because it will draw incorrect conclusions about perfomance of your

learning algorithm (overfit problem)

once you look at the test set,

your algorithm is polluted with knowledge of that test set

once you use the test set to set values of your hyper parameters,

the test set is no longer unseen data

이렇게 되면 test set을 통해 알고리즘이 낸 퍼포먼스를 바탕으로

new data, unseen data 에서도 같은 퍼포먼스를 낼 거라 기대할 수 없다

왜냐하면 test set 을 train set처럼 쓴 것이나 다름 없기 때문이다.

this is fundamental cardinal sin in machine learning models

3. Split data into train, validation, and test set

then choose hyperparameters on vallidation set and evaluate on test set

use validation sets

: to set the value of hyper parameters

reserve test sets

: to use only once at the very end to evaluate hyper parameters performance

(it will gives you a very proper estimate of your algorithms performance

on truly unseen data)

it might be terryfying

because the performance number might be terrible which means

my entire life's work has been wasted on this shit

but you have to do this

accept it and start all over again

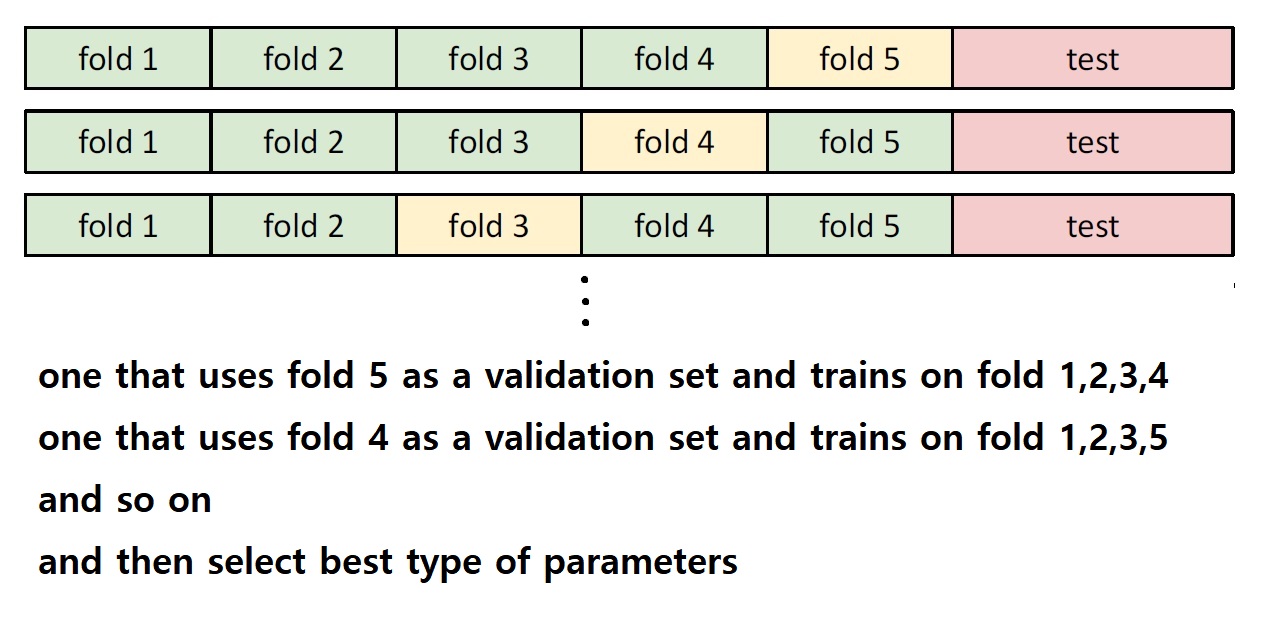

4 Split data into many many different chunks, called folds,

try each fold as validation and average the results

this is called Cross-Validation

this is the most robust way yo choose hyper parameters

but it's fairly expensive

so it is useful small data sets,

that's why it is (unfortunately) not used too frequently in deep learning

REFERENCE

1 Universal Approximation : anotehr interesting feature of KNN algorithm

: as the number of training samples goes to infinity,

nearest neighbor can represent any(*) function

according to this,

NN is the only learning algorithm we need it can represent any function

all we need to do is collect a lot of data

but there is a catch

the catch is called the curse of dimensionality

the problem is that

in order to get a kind of uniform coverage of the full space of a training set,

we need a number of training samples which is exponentially with dimension

for example

the number of binary 32 x 32 pixels image is 2**32*32,

it's approximately 10**308,

it's tremendous number

this is in fact one reason why a NN algorithm is very rarely used on raw pixel

1 it's very slow at test time

2 it's very difficult to get enough data to cover the space of all possible

images

3 distance metrics on raw picel values are just not very semantically

meaningful

however NN with CNN works well <<<HOW?

ex) image cationing

2 another similarity functon is L2 distance, Euclidean distance

With the right choice of distance metric, we can apply K - Nearest Neighbor to any type of data

3 the problem of KNN

when K is greater than 1, there might be ties between classes (white regions in the picture)

>>> nearest neighnors의 투표 결과, 동점을 받은 data가 발생한 경우

그 지역은 catrgory label을 가지지 못한다.

so you need some mechanism for breaking ties

4 examples of image data set

1 MNIST 0 ~ 9 handwritting numbers gray scale images

2 CIFAR10 10 things (airplane, automobile, bird, cat, deer, dog, frog, horse, ship, truck ) color images

<for homework>

3 CIFAR100 20 superclasses with 5 classes each (Trees: Maple, oak, palm, pine, willow)

4 ImageNet 1000 classes

<for research paper>

5 MIT Places

6 Omniglot

1. image classification algolithm 의 핵심.

data-driven approach에 기반한 image classification

카테고리를 나눠서 데이터마다 레이블을 붙이는 것.

이렇게 레이블 된 데이터들을 가지고 training set 을 만든 다음

훈련을 거쳐 새로운 이미지를 던져줬을 때

우리가 맞혀주길 바라는 레이블을 내놓게 하는게

image classification algolithm 의 핵심.

이제 문제는 어떻게 훈련을 시키느냐 하는 것이다.

어떤 알고리즘을 써서 분류하게 할 것이냐.

2. NN과 KNN의 핵심

첫번째로 NN (Nearest Neighbor) 알고리즘의 경우를 생각해보자.

NN의 유사도 함수는 두 이미지의 각 픽셀들의 차이를 구해서 합한다음

그 값이 적을수록 두 이미지가 같은 것으로 해석하는데 쓰인다.

NN의 경우엔 프로그램을 실행하는 시점에 즉시 유사도 함수를 이용해 분류를 진행하므로

훈련이라는 말에 의미가 없지만

레이블된 데이터셋을 가지고 예측을 수행한다는 점에선

머신러닝의 중요한 개념 중 일부를 보여주기에

의미가 있다.

두번째로 KNN (K - Nearest Neighbor) 알고리즘의 경우를 생각해보자

k 값이 1보다 크다는 건,

어떤 카테고리에 레이블된 데이터들이

자신들과 가까이 있는 새로 주어진 데이터가 자신들이 속해있는 카테고리로 포함되는게 맞다고 투표하게 했을 때

투표자가 1명 보다 많게 한다는 걸 의미한다.

직관적으로 보면 효과적인 아이디어라고 할 수 있다.

비슷한 것끼리는 비슷할 확률이 높으니까.

하지만 치타들 사이에 표범이 있는데 그걸 치타라고 대답하게 만드는 결과를

낳을 수 있지 않을까?

k 값을 높였을 때 decision boundary가 smooth해 질 때도 같은 선상에서 생각해볼 수 있다.

k 값이 낮아 요철이 심했던 경계가 k값을 높임으로써 부드러워진다는 건

단순히 distance metric에 의한 유사도만을 가지고 새로운 데이터의 정체를 판단하는 KNN의 알고리즘에 비추어봤을 때

고려해야할 변수가 굉장히 많은 raw image를 인식, 분류는 일을 아주 훌륭히 해낼 수 없다는 걸

스스로 증명하는 꼴처럼 느껴진다.

어떤 문제에 대한 어떤 답을 원하느냐에 따라 그 효용성이 다를테므로

적절한 k값과 distance metric을 취한 KNN이 반드시 나쁘다고만은 할 수 없다.

강의에서도 NN이 CNN 과 만났을 때 어떤 과제들을 굉장히 잘 수행해낸다고 했으니까

(도대체 둘이 어떻게 합쳐져서 어떻게 굴러가는 걸까? 매우 궁금하다)

decision boundary를 부드럽게 하는 이유는

컴퓨팅 파워가 낮은 경우,

너무 완벽한 답을 찾지 않아도 되는 경우,

빨리빨리 적당한 답을 내놔야 하는 문제일 경우 등과 같은 상황의

필요에 의한 것 같다.

(또 다른 이유가 뭐가 있을까?)

3. 데이터를 test set, validation set, train set 으로 나눠야 하는 이유

훈련을 통해 내가 찾은 하이퍼 파라미터가 적절한지,

좋은 예측/분류를 하는지 제대로 평가하기 위해서.

여기서 '제대로 평가한다'는 게 무슨 뜻인지 이해하는 게 중요하다.

예컨대 따로 떼어 놓은 최종 test set으로 train을 하거나 validation 과정을 수행하면

train, validation으로 이미 한 번 써먹은 test set을 최종 평가에 또 쓰게 된다.

이렇게 되면 최종평가로서의 test가 의미가 없어지게 된다.

한번도 보지 못한 데이터에 대한 퍼포먼스를 확인해야 하는데 이미 훈련이나 검증에 써먹은 데이터를

테스트셋으로 써버리면 퍼포먼스를 신뢰할 수 없기 때문이다 (오버핏이 되기 쉽다 이거지)

4. Cross Validation을 하는 이유 (문석빈님의 답변)

하이퍼파라미터 개수에 따라 검증셋을 맞춰서 최적의 k값을 찾기 위해서

4. Universal Approximation

존재 가능한 모든 경우의 수를 다 커버하는 샘플들로 이루어진 training set 이 있다면

NN은 인간과 거의 동등하거나 그 이상의 능력으로 classification 또는 regression을 할 수 있을 것이다.

이게 바로 as the number of training samples goes to infinity, nearest neighbor can represent any(*) function. 의 의미.

하지만 일견 생각해봐도 이는 매우 어려운 요구다.

5. Curse of dimensionality

차원의 저주. image가 가진 binary 정보만 놓고 보아도

어마어마한 수의 bit들을 컴퓨터가 처리해야만 한다.

the number of binary 32 x 32 pixels image is 2**32*32, approximately 10**308.

벡터가 이 정도인데 매트릭스, 텐서로 나아가면 어마무시한 숫자들이 나오게 될테니까.

6. Robust (백혜림님의 답변)

robust는 단단하고 잡음이 많이 섞여있는 데이터를 안정화하는 개념

잡음이 많이 섞어있는 데이터에서 잡음을 많이 제거하고 평균적인 값으로 간다는 개념

어떤 특이한 값에도 영향을 받지않은 중앙값으로 가는 것을 robust

다른 값들에 비해 지나치게 크거나 지나치게 작은 이상한 값이 들어와도 영향을 받지 않는다는 것이 robust

한마디로 noise에 강하다는 뜻.

KNN에서 k 값을 높여서 decision boundary의 요철이 부드러워지게 만드는 건, 보다 일반적인 경우에 대체로 잘 동작하게 만든 것이므로 robust하다는 표현을 쓸 수 있다. 단 이때 robust가 좋은 의미인지 나쁜의미인지는 별개의 문제다.(이영석님의 답변)

즉 ML에서 무엇이 robust하다는 게, 항상 좋기만 한 건 아닐 수 있다.

7.NN의 문제는 계산량이 많다는 것.

계산량을 줄이기 위한 방법이 연구중이다

8. NN은 데이터 차원이 낮을 때 좋다.

행렬보단 벡터일 때.

'Learning questions > 기초 개념' 카테고리의 다른 글

| 넘파이, 텐서플로에서 '차원', '축' 의 개념 (0) | 2021.02.02 |

|---|---|

| Linear classifier (0) | 2021.01.17 |

| validation set (dev set)이 필요한 이유 (0) | 2021.01.04 |

| Lec02 요약 (0) | 2021.01.04 |

| Lec 01 - Deep Learning for Computer Vision (0) | 2021.01.01 |